SPECjbb2005 User's Guide

Version 1.07

Last modified: April 27, 2006


This document is a practical guide for setting up and running a SPEC Java server benchmark (SPECjbb2005) test. To submit SPECjbb2005 results, the benchmarker must adhere to the "Run and Reporting Rules" included in the kit. For an overview of the benchmark architecture, see the SPECjbb2005 White Paper.

This document is targeted at people trying to run the SPECjbb2005 benchmark in order to accurately measure their Java system, comprised of a JVM and an underlying operating system and hardware.

SPECjbb2005 is a Java program emulating a 3-tier system with emphasis on the middle tier. Random input selection represents the first (user) tier. SPECjbb2005 fully implements the middle tier business logic. The third tier is represented by tables of objects, implemented by Java Collections, rather than a separate database.

The motivation behind SPECjbb2005 is that it is, and will continue to be, common to use Java as middleware between a database and customers. SPECjbb2005 is representative of a middle tier system, with simplifications to isolate it for benchmarking. This strategy saves the benchmarker the expense of having to invest in a fast database system in order to measure various JVM systems.

SPECjbb2005 is a follow-on release to SPECjbb2000, which was inspired by the TPC-C benchmark and loosely follows the TPC-C specification for its schema, input generation, and transaction profile. SPECjbb2005 runs in a single JVM in which threads represent terminals, where each thread independently generates random input before calling transaction specific logic. There is neither network nor disk IO in SPECjbb2005.

For a full-fledged multi-tier Java benchmark, SPEC has developed a comprehensive application server benchmark, SPECjAppServer2004.

A warehouse is a unit of stored data. It contains roughly 25MB of data stored in many objects in several Collections (HashMaps, TreeMaps). A thread represents an active user posting transaction requests within a warehouse. There is a one-to-one mapping between warehouses and threads, plus a few threads for SPECjbb2005 main and various JVM functions. As the number of warehouses increases during the full benchmark run, so does the number of threads.

A "point" represents the throughput during the measurement interval at a given number of warehouses. A full benchmark run consists of a sequence of measurement points with an increasing number of warehouses (and thus an increasing number of threads).

The user can configure the number of application instances to run. When more than one instance is selected, the instances are run concurrently, and the final measurement is the sum of the measurements for the individual instances. The multiple application instances are synchronized using local socket communication and a controller.

This section describes the steps necessary to set up SPECjbb2005. The setup instructions for most platforms are very similar to these generic setup instructions.

Make sure Java is correctly installed on the test machine.

Put the CD in the CDROM drive.

Mount it (if necessary) and enter the top-level cdrom directory.

Run InstallShield:

java setup

or double click on setup.exe

Follow the instructions from there.

Alternately, to run without the GUI, use the following command:

java setup -o <installation directory>

There should now be two jar files (jbb.jar and check.jar), documentation in doc, and source and class files in src/spec/jbb and src/spec/reporter.

Do NOT recompile (javac).

You are expected to use the bytecodes provided. Recompiling the benchmark will cause validation errors.

Try running the benchmark using either

run.sh

or

run.bat

as appropriate to your operating system. These are provided as examples, and may require minor modifications for your particular environment. Go to the directory containing the benchmark.

Alternately, set

CLASSPATH=./jbb.jar:./check.jar:$CLASSPATH

for Unix or

CLASSPATH=.\jbb.jar;.\check.jar;%CLASSPATH%

for Windows.

To run the benchmark up to 8 warehouses (the default configuration of the SPECjbb.props) execute the command:

java -Xms256m -Xmx256m spec.jbb.JBBmain -propfile SPECjbb.props

The benchmarker will probably not want to keep these numbers, since the heap is fairly small and the properties files (SPECjbb.props and SPECjbb_config.props) don't yet reflect the system under test.

Having done a trial run of the benchmark, the benchmarker should now learn what the changeable parameters are, and where to find and change them in the properties files. Also, the documentation (and publishability) of the runs will be improved by editing the descriptive properties file described below.

SPECjbb2005 takes two properties files as input: a control properties file and a descriptive properties file. The control properties file is used to modify the operation of the benchmark, for example altering the length of the measurement interval. The descriptive properties file is used to document the system under test; this documentation is required for publishable results, and is reflected in the output files. The values of the descriptive properties do not affect the workload.

The default name for the control properties file is SPECjbb.props, but may be overridden with a different name by using the -propfile command line option (see section 3 for an example). The name for the descriptive properties file is specified in the control properties file, using the input.include_file property. The default name, as distributed in the sample control properties file included with the kit, is SPECjbb_config.props. See below for a brief description of how to modify properties in these files.

A sample control properties file and a sample descriptive properties file are distributed with the SPECjbb2005 kit, using the default names. Before modifying these files, first make a copy of the originals to enable easy recovery of the default benchmark configuration. Also, if running on several platforms, the benchmarker will probably want to take advantage of the naming features described in the previous paragraph (e.g., SPECjbb_config.ALPHA_400.props, SPECjbb_config.NT_500.props).

Each line of a properties file is either blank, a comment, or a property assignment. Comments begin with the # character. Each property assignment is of the form name=value. "name" is the property identifier; "name" and "property" are often used synonymously. Property names are specific to the benchmark and must not be changed. See below for a discussion of the control properties that the benchmarker is likely to want to change and for a list of control properties that must not be changed in order for the SPECjbb2005 result to be publishable. See section 4 for examples of the specification of property values.
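The line format described above matches what the standard java.util.Properties class accepts. The following is a minimal sketch, assuming the benchmark's files follow those conventions (the double-backslash rule for file separators suggests this, but the guide does not state it). The class name PropsFormatDemo and the sample path are hypothetical and used only for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

class PropsFormatDemo {
    /** Parses properties-file text using the standard java.util.Properties rules. */
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            // Reading from an in-memory string should not fail; rethrow unchecked.
            throw new UncheckedIOException(e);
        }
        return props;
    }

    public static void main(String[] args) {
        // Hypothetical file content: a comment, a blank line, two assignments.
        // In the file itself the path is written as c:\\path\\to\\SPECjbb_config.props
        String sample =
            "# a comment line\n"
            + "\n"
            + "input.measurement_seconds=240\n"
            + "input.include_file=c:\\\\path\\\\to\\\\SPECjbb_config.props\n";
        Properties props = parse(sample);
        System.out.println(props.getProperty("input.measurement_seconds"));
        // The doubled backslashes in the file collapse to single ones when loaded.
        System.out.println(props.getProperty("input.include_file"));
    }
}
```

Comment and blank lines produce no entries, so only the two assignments are visible after loading.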

The control properties file allows modification of three distinct benchmark behaviors: length of run, warehouse sequence, and garbage collection behavior. The benchmarker may experiment with any of these behaviors, but for publishable results, there are restrictions on the modifications. See the following paragraphs for these restrictions. The control properties file also contains a property specifying the benchmark name, but this should not be changed.

Warehouse sequence may be controlled in either of two ways. The usual method for specifying warehouse sequence is the set of three properties, input.starting_number_warehouses, input.increment_number_warehouses, and input.ending_number_warehouses, which causes the sequence of warehouses to progress from input.starting_number_warehouses to input.ending_number_warehouses, incrementing by input.increment_number_warehouses. The alternative method of specifying warehouse sequence is input.sequence_of_number_of_warehouses, which allows specification of an arbitrary list of positive integers in increasing order. For a publishable result the warehouse sequence must begin at 1 and increment by 1. See Section 4.1 for a discussion of requirements on the total number of warehouses that must be run.
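The two ways of specifying a warehouse sequence can be illustrated with a short sketch. This is not the benchmark's own code: the class and method names are hypothetical. It also assumes that the explicit list is whitespace-separated (as in the sample value "1 2 3 4 5 6 7 8") and that the ending value is included when the increment reaches it exactly.

```java
import java.util.ArrayList;
import java.util.List;

class WarehouseSequence {
    /** Sequence from start to end (inclusive), stepping by increment. */
    static List<Integer> fromRange(int start, int increment, int end) {
        if (start < 1 || increment < 1 || end < start) {
            throw new IllegalArgumentException("need 1 <= start <= end and increment >= 1");
        }
        List<Integer> seq = new ArrayList<>();
        for (int w = start; w <= end; w += increment) {
            seq.add(w);
        }
        return seq;
    }

    /** Sequence from an explicit whitespace-separated list such as "7 10 12". */
    static List<Integer> fromList(String value) {
        List<Integer> seq = new ArrayList<>();
        for (String token : value.trim().split("\\s+")) {
            seq.add(Integer.parseInt(token));
        }
        return seq;
    }

    /** True if the sequence begins at 1 and increments by 1, as publishable runs require. */
    static boolean beginsAtOneIncrementsByOne(List<Integer> seq) {
        for (int i = 0; i < seq.size(); i++) {
            if (seq.get(i) != i + 1) {
                return false;
            }
        }
        return !seq.isEmpty();
    }

    public static void main(String[] args) {
        System.out.println(fromRange(1, 1, 8));
        System.out.println(fromList("7 10 12"));
        System.out.println(beginsAtOneIncrementsByOne(fromList("7 10 12")));
    }
}
```

A list such as "7 10 12" is useful for experiments but fails the begin-at-1, increment-by-1 check, so it could not be used for a publishable result.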

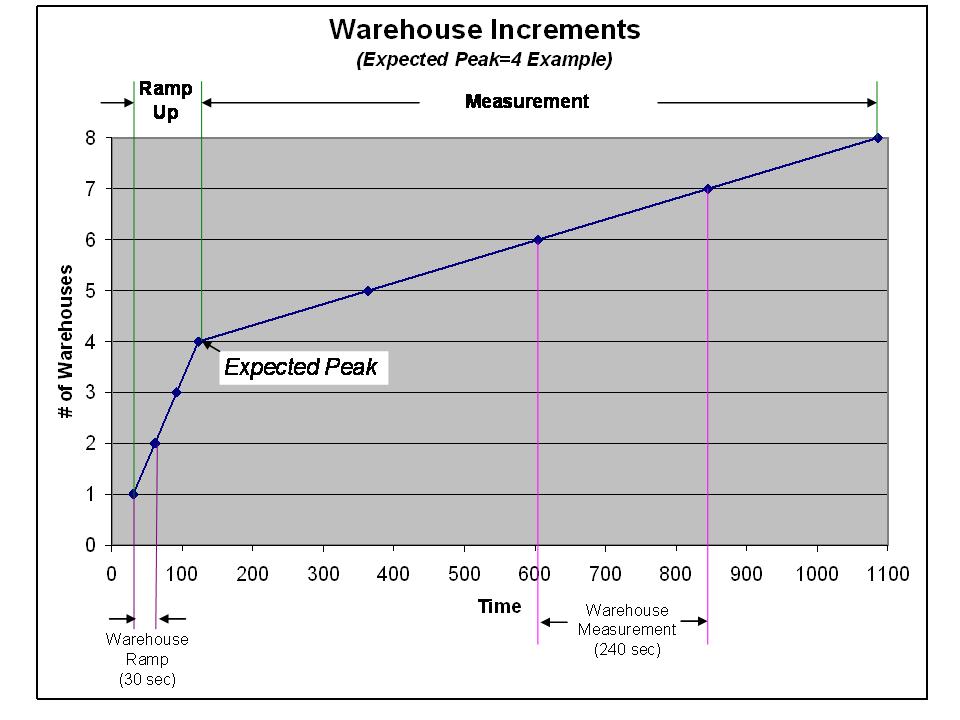

The length of the run is controlled by the properties input.per_jvm_warehouse_rampup, input.per_jvm_warehouse_rampdown, input.ramp_up_seconds, input.measurement_seconds, and input.expected_peak_warehouse. input.per_jvm_warehouse_rampup and input.per_jvm_warehouse_rampdown specify, in seconds, the ramp-up and ramp-down time for each JVM instance. input.ramp_up_seconds specifies, in seconds, the length of each warehouse interval before the expected_peak_warehouse, and input.measurement_seconds specifies, in seconds, the length of each warehouse interval from there on. For a result to be publishable, input.ramp_up_seconds must be 30 and input.measurement_seconds must be 240.

After completing setup as described in the previous section, the benchmark is ready to run.

Try running the benchmark using either

run.sh

or

run.bat

as appropriate to your operating system. These are provided as examples, and may require minor modifications for your particular environment.

Alternately, set CLASSPATH:

CLASSPATH=./jbb.jar:./check.jar:$CLASSPATH

for Unix or

CLASSPATH=.\jbb.jar;.\check.jar;%CLASSPATH%

for Windows,

then run the following line:

java -Xms<min> -Xmx<max> spec.jbb.JBBmain -propfile SPECjbb.props

Most operating systems should be able to use a similar command line. Specifying more heap is allowed, and will probably help to get higher scores.

The benchmark output appears in the results directory for a single-JVM run and in a created subdirectory of that for a multi-JVM run by default. The output types are raw, results, html, and text, one of each for each JVM instance. The files are tagged with a sequential number, as

SPECjbb.<num>.raw,

SPECjbb.<num>.results,

SPECjbb.<num>.html,

and SPECjbb.<num>.txt

respectively. The benchmark also produces SPECjbb.<num>.jpg, which SPECjbb.<num>.html refers to.

Usually one doesn't need to run the Reporter manually, as the benchmark automatically creates all the file formats. However, if needed, a raw file can be processed with the Reporter tool to create: html with an html graph, html with a jpg graph, or nicely-formatted text. The html with a jpg graph requires Java 2. The html with an html graph is provided for those without Java 2. When the benchmark calls the Reporter, it will default to that which is available in the environment.

Additionally, the Reporter can be used to compare two results.

The most common way of running the Reporter is

java spec.reporter.Reporter -e -r results/SPECjbb.<num>.raw -o results/SPECjbb.<num>.html

There are a number of other options:

Usage: java spec.reporter.Reporter [options]

Required options are:

-r Results A SPEC results file, generated by a benchmark run.

May be in a mail message with mail headers.

Other options are:

-a Plain ASCII text output

default: generate HTML output with JPG graph

-c Second raw file, to compare

default: none

-e Do NOT echo raw results properties in HTML output

default: raw results inserted as HTML comments

-h Create graph in HTML rather than JPG

default: use JPG if have Java2

-l Label Label to infix into the JPG name: SPECjbb.label.jpg

default: a random number

-o Output Output file for generated HTML

default: written to System.out

-v Verbose. List extra spec.testx.* properties

default: extra properties are not reported

So, comparing two results is done as follows:

java spec.reporter.Reporter -e -r results/SPECjbb.<num>.raw -c results/SPECjbb.<other-num>.raw -o results/compare.html

For multi-JVM runs, use the reporter as follows:

java spec.reporter.MultiVMReporter -a -r results -o multi-vm-report.txt

Usage: java spec.reporter.MultiVMReporter [options]

Required options are:

-r ResultsDir Directory with results files

Other options are:

-a Plain ASCII text output

default: generate HTML output with JPG graph

-e Do NOT echo raw results properties in HTML output

default: raw results inserted as HTML comments

-o Output Output file for generated HTML or TXT

default: index.html for HTML, MultiVMReport.txt for TXT

(NB: html links are generated as relative to the directory with results files.)

Results submitted to SPEC will appear on the SPEC website in the asc and html(-jpg) formats. The reporter output has to be redirected or specified with -o filename. The Reporter will use only the last value when the same point (number of warehouses) is repeated multiple times.

Section 2 introduced the properties files. These can be modified to control operation of the benchmark. There are two properties files: SPECjbb.props and SPECjbb_config.props. Their relationship is described in more detail in Section 2. Section 2 also describes the general format of the properties lines, and how to change them.

------------------------------SPECjbb.props--------------------------------

#########################################################################

# #

# Control parameters for SPECjbb benchmark #

# #

#########################################################################

#

# This file has 2 sections; changable parameters and fixed parameters.

# The fixed parameters exist so that you may run tests any way you want,

# however in order to have a valid, reportable run of SPECjbb, you must

# reset them to their original values.

#

#########################################################################

# #

# Changable input parameters #

# #

#########################################################################

# Warehouse sequence may be controlled in either of two ways. The more

# usual method for specifying warehouse sequence is the triple

# input.starting_number_warehouses, input.increment_number_warehouses,

# and input.ending_number_warehouses, which causes the sequence of

# warehouses to progress from input.starting_number_warehouses to

# input.ending_number_warehouses, incrementing by

# input.increment_number_warehouses.

# The alternative method of specifying warehouse sequence is

# input.sequence_of_number_of_warehouses, which allows specification of

# an arbitrary list of positive integers in increasing order.

# For a publishable result the warehouse sequence must begin at 1,

# increment by 1 and go to at least 8 warehouses

# The expected_peak_warehouses defaults to the result of the runtime call

# Runtime.getRuntime.availableProcessors()

# It can be overridden here but then a submission should include an explanation

# in the config.sw.notes field

input.jvm_instances=1

input.starting_number_warehouses=1

input.increment_number_warehouses=1

#input.ending_number_warehouses=2

#The default value for input.expected_peak_warehouse

#is Runtime.getRuntime.availableProcessors

#If you modify this property, the result must be submitted for review before publication

#and an explanation should be included in the Notes section. See the run rules for details.

#input.expected_peak_warehouse =0

#input.sequence_of_number_of_warehouses=1 2 3 4 5 6 7 8

#

# 'show_warehouse_detail' controls whether to print per-warehouse

# statistics in the raw results file. These statistics are not used

# in the final report but may be useful when analyzing a JVMs behavior.

# When running a large number of warehouses (i.e. on a system with a

# lot of CPUs), changing this to true will results in very large

# raw files. For submissions to SPEC, it is recommended that this

# attribute be set to false.

#

input.show_warehouse_detail=false

#

# 'include_file' is the name for the descriptive properties file. On

# systems where the file separator is \, use \\ as the file separator

# here.

#

# Examples:

# input.include_file=SPECjbb_config.props

# input.include_file=/path/to/SPECjbb_config.props

# input.include_file=c:\\path\\to\\SPECjbb_config.props

#

input.include_file=SPECjbb_config.props

#

# directory to store output files. On systems where the file separator

# is \, use \\ as the file separator here.

#

# Examples:

# input.output_directory=results

# input.output_directory=/path/to/results

# input.output_directory=c:\\path\\to\\results

#

input.output_directory=results

#########################################################################

# #

# Fixed input parameters #

# #

# YOUR RESULTS WILL BE INVALID IF YOU CHANGE THESE PARAMETERS #

# #

#########################################################################

# DON'T CHANGE THIS PARAMETER, OR ELSE !!!!

input.suite=SPECjbb

# Benchmark logging level

input.log_level=INFO

# Deterministic Random Seed (required value is false)

input.deterministic_random_seed=false

# rampup and rampdown specify rampup and rampdown for multi-jvm mode

# only. (seconds)

input.per_jvm_warehouse_rampup=3

input.per_jvm_warehouse_rampdown=20

#

# If you need to collect stats or profiles, it may be useful to increase

# the 'measurement_seconds'. This will, however, invalidate your results

#

# Amount of time to run each point prior to the measurement window

input.ramp_up_seconds=30

# Time of measurement window

input.measurement_seconds=240

------------------------------end of SPECjbb.props--------------------------------

------------------------------SPECjbb_config.props--------------------------------

#

# SPECjbb properties file

#

# This is a SAMPLE file which you can use to specify characteristics of

# a particular system under test, and to control benchmark

# operation. You can reuse this file repeatedly, and have a version for

# each system setup you use. You should edit the reporting fields

# appropriately. All of this can still be edited in the output properties

# file after you run the test, but putting the values in here can save

# you some typing for attributes which do not change from test to test.

#

#########################################################################

#

# System Under Test hardware

#

#########################################################################

# Company which sells the hardware

config.hw.vendor=Neptune Ocean King Systems

# Home page for company which sells the hardware

config.hw.vendor.url=http://www.neptune.com

# What type of system was used

config.hw.model=TurboBlaster 2

# What type of processor(s) the system had

config.hw.processor=ARM

# MegaHertz rating of the chip. Usually an integer

config.hw.MHz=300

# Number of chips in the system as configured

config.hw.nchips=1

# Number of cores in the system as configured

config.hw.ncores=2

#Number of cores/chips

config.hw.ncoresperchip=2

# Number of HW Threads configured

config.hw.hwThreads=4

# Amount of physical memory in the system, in Megabytes. DO NOT USE MB

# or GB, IT WILL CONFUSE THE REPORTER

config.hw.memory=4096

#Dexscribe the memory in the system - e.g. 4 of 8 DIMMs filled with 1GB DDR 2100 CAS3 memory

config.hw.memory_description=punch cards

# Amount of level 1 cache for instruction and data on each CPU

config.hw.primaryCache=4KBI+4KBD

# Amount of level 2 cache, for instruction and data on each CPU

config.hw.secondaryCache=64KB(I+D) off chip

# Amount of level 3 cache (or above)

config.hw.otherCache=

# The file system the class files reside on

config.hw.fileSystem=UFS

# Size and type of disk on which the benchmark and OS reside on

config.hw.disk=1 x 4GB SCSI (classes) 1 x 12GB SCSI (OS)

# Any other hardware you think is performance-relative. That is, you

# would need this to reproduce the test

config.hw.other=AT&T Rotary Telephone

config.hw.available=Jan-2000

#########################################################################

#

# System Under Test software

#

#########################################################################

# The company that makes the JVM software

config.sw.vendor=Phobos Ltd

# Home page for the company that makes the JVM software

config.sw.vendor.url=http://www.phobos.uk.co

# Name of the JVM software product (including version)

config.sw.JVM=Phobic Java 1.5.0

# Date when the JVM software product is shipping and generally available

# to the public

config.sw.JVMavailable=Jan-2000

# How many megabytes used by the JVM heap. "Unlimited" or "dynamic" are

# allowable values for JVMs that adjust automatically

config.sw.JVMheapInitial=256

config.sw.JVMheapMax=1024

#Is the JVM 32- or 64-bit? (or some other number?)

config.sw.JVMbitness=32

# Command line to invoke the benchmark

# On systems where the file separator is \, use \\ as the file separator

# here

config.sw.command_line=java -ms256m -mx1024m -spec.jbb.JBBmain -propfile Test1

# Operating system (including version)

config.sw.OS=Phobos DOS V33.333 patch-level 78

# Date when the OS version is shipping and generally available to the

# public

config.sw.OSavailable=May-2000

# Free text description of what sort of tuning one has to do to either

# the OS or the JVM to get these results. This is where kernel tunables

# belong. Use HTML list tags, if you want a list on the report page

config.sw.tuning=Operating system tunings<UL><LI>bufcache=1024</LI><LI>maxthreads_per_user=65536</LI></UL>

#Text explanation of the Ahead-of-Time Compilation mechanism

config.sw.aot=<p>precompiled code for<ul><li>Deliverytransaction.process</li><li>TransactionManager.go</li></ul>loaded at startup</p>

# Any additional software that you think is need to reproduce the

# performance measured on this test

config.sw.other=Neptune JIT Accelerator 2.3b6

# Date when the other software is shipping and generally available to

# the public

config.sw.otherAvailable=Feb-2000

#########################################################################

#

# Tester information

#

#########################################################################

# The company that ran and submitted the result

config.test.testedBy=Neptune Corp.

# The person who ran and submitted this result (name does not go on

# public pages

config.testx.testedByName=Willie the Mailboy

# A web page where people within the aforementioned company might get

# more information

config.test.internalReference=http://pluto.eng/specpubs/mar2000/

# The company's SPEC license number

config.test.specLicense=50

# Physically, where the results were gathered

config.test.location=Santa Monica, CA

#Notes

config.sw.notes="Notes here"

------------------------------end of SPECjbb_config.props-------------------------

These parameters can be categorized as:

Control parameters - Variables that describe the load to put on the server. Several of these are "constants" in the sense that a particular value is required for use in publishable runs. These are included in the control properties file, SPECjbb.props.

Configuration description - Details about the system that are necessary for the final report, such as CPU types, memory sizes, etc. As distributed in the sample kit, these are in the included properties file, SPECjbb_config.props.

These are the parameters which specify the workload.

jvm_instances

The number of JVM instances in the benchmark run.

starting_number_warehouses, increment_number_warehouses, ending_number_warehouses, expected_peak_warehouse

Parameters to specify a series of numbers of warehouses to run at once. For example, with the settings start=1, increment=1, and end=10 respectively, the benchmark will run first 1 warehouse, then 2 concurrent warehouses, then 3, and so on up to 10. Since the metric averages points from the expected peak to twice as many warehouses, the benchmark must be run to at least twice that number of warehouses.

If the expected_peak_warehouse is not specified, it takes the value returned by the call Runtime.getRuntime().availableProcessors(). If you override this default by specifying a value, any result must be submitted to the SPEC Java subcommittee before announcement of the results, and an explanation of the change should be put in the notes section of the report. An example of an acceptable reason to override the default value would be if the availableProcessors() call does not return an accurate or valid value for the hardware architecture of the SUT. An example of an unacceptable reason would be decreasing the value from the default to hide scalability problems and artificially obtain a higher score.

Note: publication requires all the points from 1 to 2*n warehouses, where n is the expected peak warehouse, and the metric is computed from the points n to 2*n. In some cases, where the system under test is unable to successfully run all points up to 2*n, the runs may still be valid and publishable. See section 2.3 of the run and reporting rules.
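To see what default the benchmark would pick up on a given machine, the same runtime call can be made directly. The sketch below is illustrative only; the class and method names are hypothetical. The lastRequiredPoint helper reflects the requirement stated in this guide that all points up to twice the expected peak, and at a minimum up to 8, must be run.

```java
class ExpectedPeakDefault {
    /** The value the guide says is used when expected_peak_warehouse is not set. */
    static int defaultExpectedPeak() {
        return Runtime.getRuntime().availableProcessors();
    }

    /** Highest warehouse count that must be run: twice the peak, but never fewer than 8. */
    static int lastRequiredPoint(int expectedPeak) {
        return Math.max(8, 2 * expectedPeak);
    }

    public static void main(String[] args) {
        int n = defaultExpectedPeak();
        System.out.println("availableProcessors() reports: " + n);
        System.out.println("metric averages points " + n + " to " + (2 * n));
        System.out.println("run must reach at least " + lastRequiredPoint(n) + " warehouses");
    }
}
```

If the reported processor count does not match the hardware of the system under test, that is the situation in which the guide permits overriding the property, with an explanation in the notes.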

input.sequence_of_number_of_warehouses=7 10 12

An alternative to the above. If using this, comment out one of starting_number_warehouses, increment_number_warehouses, or ending_number_warehouses. This allows skipping numbers of warehouses in a non-periodic fashion, or repeating a number of warehouses more than once. The list is assumed to be increasing; if it is not, the results may not appear as intended.

input.include_file=SPECjbb_config.props

The name of a file which contains other input parameters. The intended use is to allow segregation of "config" parameters from "input" parameters, easing changing one set without the other. This way, one can have several system configuration files for different systems, and simply change which one to include for a given run. Similarly, one system can easily have several sets of input parameters all referencing the same hardware/software descriptions. In fact, the parameters may be divided between this file and the specified propfile some other way if you want. Be careful not to lose any parameters. Duplicated parameters will take on the last value seen, with the include file following the propfile.
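The precedence rule described above (the include file follows the propfile, and a duplicated parameter takes the last value seen) can be sketched as follows. This is an illustration of the rule, not the benchmark's implementation; the class name PropsMerge and the sample values are hypothetical.

```java
import java.util.Properties;

class PropsMerge {
    /**
     * Combines the control properties with the included descriptive properties.
     * The include file is applied second, so its value wins for any duplicate key.
     */
    static Properties merge(Properties control, Properties included) {
        Properties merged = new Properties();
        merged.putAll(control);
        merged.putAll(included);
        return merged;
    }

    public static void main(String[] args) {
        Properties control = new Properties();
        control.setProperty("input.measurement_seconds", "240");
        control.setProperty("config.hw.memory", "2048");

        Properties included = new Properties();
        included.setProperty("config.hw.memory", "4096");

        Properties merged = merge(control, included);
        // The duplicated key takes the value from the include file.
        System.out.println(merged.getProperty("config.hw.memory"));
        System.out.println(merged.getProperty("input.measurement_seconds"));
    }
}
```

Because of this ordering, a stray control property left in the include file would silently override the value in the propfile, which is one reason to keep the two sets of parameters separate.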

The next four are "constants". Normally, you will not change these. They are provided for information only. Any changes to the benchmark constants will invalidate the run. SPEC does not endorse such changes, and results obtained with modified benchmark constants are not publishable.

The examples shown are the default and also the required values for valid runs.

input.per_jvm_warehouse_rampup=3

The ramp-up time, in seconds, for each JVM instance. Used in multi-JVM mode only.

input.per_jvm_warehouse_rampdown=20

The ramp-down time, in seconds, for each JVM instance. Used in multi-JVM mode only.

input.ramp_up_seconds=30

The length in seconds of each warehouse interval before the expected_peak_warehouse. This must be 30 for a publishable run.

input.measurement_seconds=240

The length in seconds of each warehouse interval from the expected peak warehouse to the end of the run. This must be 240 for a publishable run.

The Configuration description section should contain a full description of the testbed. It should have enough information to repeat the test results, including all necessary hardware, software and tuning parameters. All parameters with non-default values must be reported in the Notes section of the description.

The configuration description has the following categories.

Server Hardware

JVM Software

Test Information

Each category contains variables for describing it. For example, Hardware contains variables for CPUs, caches, controllers, etc. If a variable doesn't apply, leave it blank. If no variable exists for a part you need to describe, add some text to the notes section. The notes sections can contain multiple lines.

The properties file included in the kit contains examples for filling out these fields.

Hardware:

config.hw.vendor=SAMPLE vendor

This is the company which sells the hardware.

config.hw.vendor.url=http://www.neptune.com

This is the web page for the company which sells the hardware.

config.hw.model=TurboBlaster 2

What type of system was used.

config.hw.processor=ARM

What type of processor the system had.

config.hw.MHz=300

MegaHertz rating of the chip. Usually an integer, except for Intel.

config.hw.nchips=1

The number of chips in the system.

config.hw.ncores=2

The number of cores in the system.

config.hw.ncoresperchip=2

The number of cores/chip.

config.hw.hwThreads=4

The number of HW Threads configured.

config.hw.memory=4096

The amount of physical memory in the system under test, in megabytes. That's important to the reporter; saying "4G" or "2Gb" will confuse it, and your memory will be reported incorrectly.

config.hw.memory_description=punch cards

The memory layout and components. Key information to be included are the number of memory slots in the system, and the type of memory in them.

config.hw.primaryCache=4KBI+4KBD

The amount of level 1 cache, for instruction and data, on each CPU.

config.hw.secondaryCache=64KB(I+D)

The amount of level 2 cache, for instruction and data, on each CPU. This example shows a combined cache.

config.hw.otherCache=none

Level 3 cache or above.

config.hw.fileSystem=UFS

The file system the classes reside on.

config.hw.disk=2 x 4GB SCSI

Size and type of disk on which the benchmark and the OS reside. This is to help with reproduction of results.

config.hw.other=none

Anything you think is performance-relevant hardware-wise that someone would need to be told about in order to reproduce the result.

config.hw.available=Jan-2000

The date when the hardware is shipping and generally available to the public.

Software:

config.sw.vendor=Phobos Ltd

This is the company which sells the JVM software.

config.sw.vendor.url=http://www.phobos.uk.co

This is the web page for the company which sells the JVM software.

config.sw.JVM=Phobic Java

This is the name of the JVM software product.

config.sw.JVMavailable=Jan-2000

The date when the product is shipping and generally available to the public.

config.sw.JVMheapInitial=256

How many megabytes of memory were used for the initial heap (-ms). "Unlimited" or "dynamic" are also allowed for JVMs which adjust automatically.

config.sw.JVMheapMax=1024

How many megabytes of memory were used for the maximum heap (-mx). "Unlimited" or "dynamic" are also allowed for JVMs which adjust automatically.

config.sw.JVMbitness=32

Specifies whether the JVM is 32- or 64-bit (or some other number).

config.sw.command_line=java -ms256m -mx1024m -spec.jbb.JBBmain -propfile Test1

The command line used to invoke the benchmark.

config.sw.OS=Phobos

The operating system on the system under test.

config.sw.OSavailable=Jan-2000

The date when the operating system is shipping and generally available to the public.

config.sw.tuning=none

Free text description of what sort of tuning one has to do to either the OS or the JVM to get these results. This is where kernel tunables belong.

config.sw.aot=none

Text explanation of the Ahead-of-Time Compilation mechanism.

config.sw.other=Neptune JIT Accelerator 2.3b6

Any additional software that you think is performance-relevant and that someone would need to be told about in order to reproduce the result.

config.sw.otherAvailable=Feb-2000

When it's shipping and generally available.

And, the test information:

config.test.testedBy=Neptune Corp.

The company that ran and submitted this result.

config.testx.testedByName=Willie the Mailboy

The person who ran and submitted this result.

config.test.internalReference=http://pluto.eng/specpubs/mar2000/

A web page where people within the aforementioned company might get more information.

config.test.specLicense=50

The company's SPEC license number.

config.test.location=Santa Monica, CA

Physically, where the results were gathered.

In order to be a publishable result, or directly comparable to existing published results, a run must pass several runtime validity checks:

The checksum on jbb.jar must indicate that it contains the same bytecodes that SPEC shipped.

Elapsed time of each measurement interval shall be within 0.5% lower to 10% higher than the specified measurement interval length (240 seconds).

The run must include all points from 1 up to twice the number of warehouses at the expected peak, and at a minimum all points from 1 to 8 warehouses.

The Java environment must pass the partial conformance testing done by the benchmark prior to running any points.
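The elapsed-time check in the list above is simple arithmetic: for the required 240-second interval, the tolerance of 0.5% lower to 10% higher gives an accepted range of roughly 238.8 to 264 seconds. A minimal sketch of that check follows; the class and method names are hypothetical and this is not the benchmark's own validation code.

```java
class IntervalCheck {
    /** True if elapsed is no more than 0.5% below and no more than 10% above the specified length. */
    static boolean isElapsedTimeValid(double elapsedSeconds, double specifiedSeconds) {
        double lowest = specifiedSeconds * 0.995;
        double highest = specifiedSeconds * 1.10;
        return elapsedSeconds >= lowest && elapsedSeconds <= highest;
    }

    public static void main(String[] args) {
        double specified = 240.0; // required measurement interval for publishable runs
        System.out.println(isElapsedTimeValid(241.3, specified)); // slightly long: accepted
        System.out.println(isElapsedTimeValid(238.0, specified)); // too short: rejected
        System.out.println(isElapsedTimeValid(270.0, specified)); // too long: rejected
    }
}
```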

SPECjbb2005 produces two throughput metrics, as follows:

The total throughput measurement, SPECjbb2005 bops

The average throughput per JVM instance, SPECjbb2005 bops/JVM

The throughput metrics are calculated as follows:

For each JVM instance, all points (numbers of warehouses) are run, from 1 up to at least twice the number N of warehouses expected to produce the peak throughput. At a minimum all points from 1 to 8 must be run.

For all points from N to 2*N warehouses, the scores for the individual JVM instances are added.

The summed throughputs for all the points from N to 2*N warehouses, inclusive, are averaged. This average is the SPECjbb2005 bops metric. As explained in section 2.3 of the run and reporting rules, results from systems that are unable to run all points up to 2*N warehouses are still considered valid. For all the missing points in the range N to 2*N, the throughput is considered to be 0 SPECjbb2005 bops in the metric computation.

The SPECjbb2005 bops/JVM is obtained by dividing the SPECjbb2005 bops metric by the number of JVM instances.
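The calculation steps above can be sketched as follows. This is only an illustration: the class and method names are hypothetical, the peak warehouse count N is passed in rather than determined from the data, and the input is assumed to already hold the per-point throughput summed over all JVM instances. The reporter in the kit remains the authoritative implementation.

```java
import java.util.Map;
import java.util.TreeMap;

public class BopsSketch {
    /**
     * summedThroughput maps a warehouse count to the throughput summed over all
     * JVM instances at that point. Points that were not measured are absent
     * from the map and count as 0 in the average.
     */
    public static double bops(Map<Integer, Double> summedThroughput, int peakWarehouses) {
        double total = 0.0;
        int points = 0;
        for (int w = peakWarehouses; w <= 2 * peakWarehouses; w++) {
            total += summedThroughput.getOrDefault(w, 0.0);
            points++;
        }
        return total / points;
    }

    /** Average throughput per JVM instance. */
    public static double bopsPerJvm(double bops, int jvmInstances) {
        return bops / jvmInstances;
    }

    public static void main(String[] args) {
        // Made-up scores for illustration only; the point at 4 warehouses is missing.
        Map<Integer, Double> scores = new TreeMap<>();
        scores.put(1, 1000.0);
        scores.put(2, 2000.0);
        scores.put(3, 1900.0);

        double metric = bops(scores, 2);            // averages points 2, 3 and 4
        System.out.println(metric);                 // (2000 + 1900 + 0) / 3 = 1300.0
        System.out.println(bopsPerJvm(metric, 2));  // 650.0
    }
}
```

The example shows why a missing point in the range N to 2*N lowers the metric: it still counts in the divisor but contributes nothing to the sum.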

The reporting tool contained within SPECjbb2005 produces a graph of the throughput at all the measured points with warehouses on the horizontal axis and the summed throughputs on the vertical axis. All points from 1 to the minimum of 8 or 2*N are required to be run and reported. Missing points in the range N to 2*N will be reported to have a throughput of 0 SPECjbb2005 bops. The points being averaged for the metric will be marked on the report.

Benchmark results are available in several forms:

Results files

Html file

Raw file

Text report page

All of these are just different ways of presenting the same basic information. All include the SPECjbb2005 metrics, the total scores at individual numbers of warehouses, validation or error messages, information about the configuration, information about the input parameters, and details about each point.

For this reason, these are described in detail only once, in the HTML Result Pages section. The descriptions of the other formats will refer back to classifications of information described in the HTML section. Sample output pages are included with the kit (Text, HTML-jpeg, HTML-only, raw, results). Errors in the creation of any format (other than raw) do not prevent publication.

How to create the other formats from the raw file is described in Section 3.3.

A benchmark run will generate one raw file per JVM instance. The raw file(s) will be put in a subdirectory of the results directory with a name of the form SPECjbb.xxx where xxx is a numeric suffix. The SPECjbb.yyy.raw files in that subdirectory (yyy is numeric, with one file for each JVM instance) contain all the inputs from the properties files and the results from the test. The reporter tool uses these files to generate all the other file formats. The raw files are submitted to SPEC. (See Submitting Results.)

If you intend to postprocess the results, this is the best file to start from. All information is stored in field=value pairs. It can be read into a Java program using the Properties class, or handled by Perl, awk, or shell using pattern matching or grep.
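A minimal sketch of reading field=value pairs with java.util.Properties follows. The class name and the sample text are illustrative; the two keys shown are taken from the descriptive properties earlier in this guide and are assumed to appear in the raw file under the same names. To read a real raw file, pass a FileReader for that file instead of the StringReader used here.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

public class RawFileSketch {
    /** Loads field=value pairs from any Reader into a Properties object. */
    public static Properties parse(Reader reader) {
        Properties props = new Properties();
        try {
            props.load(reader);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    public static void main(String[] args) {
        // Stand-in for the contents of a raw file (assumed key names).
        String sample = "config.sw.OS=Phobos\nconfig.test.specLicense=50\n";
        Properties raw = parse(new StringReader(sample));

        System.out.println(raw.getProperty("config.sw.OS"));            // Phobos
        System.out.println(raw.getProperty("config.test.specLicense")); // 50
        // A second argument supplies a default for fields that are absent.
        System.out.println(raw.getProperty("no.such.field", "(missing)"));
    }
}
```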

The HTML result pages contain the following elements.

See Section 6 to see how the metrics are calculated.

Performance for each point, in the form of a chart and graph. (Text and the results files do not include a graph.) The dashed line (in the jpg graph) is at the level of the overall metric, stretching across the points which were averaged together to get the metric.

The hardware vendor and model name, the OS vendor and name/version, and the JVM software vendor and software name/version. Enough information to reproduce the environment.

All the other information from the Configuration description entries in the descriptive properties file.

See the Run and Reporting Rules section on Availability for information on how to determine if results on your system meet the availability criteria for publication.

The notes and tuning entries from the descriptive properties file.

Any errors encountered in the test inputs or results. To be valid, the test must have no errors reported in this section. Any errors will cause the word 'Invalid' to appear next to the SPECjbb2005 result number (Text and HTML formats). See Section 5 for details on validity checks.

If a thread ran out of memory, it will be reported at the point in the output where the condition occurred.

For each number of warehouses, the counts of each type of transaction, along with their total and maximum response times, the percentage size of the range of transactions done by different threads, and the amount of heap used, are presented. The average is not given, because it tends to be very small. You can compute it from the total and the count of transactions.

This is automatically generated by the benchmark run. You can also run

java spec.reporter.Reporter -a -e -r results/SPECjbb.<num>.raw

to get the Text report format. This is one of the formats available on the SPECjbb2005 result pages website. It is very similar to the HTML format, except that it lacks the chart. It is primarily for viewing or printing on a system without a browser.

The benchmark outputs data as it runs. The results for each JVM instance are written to standard output, which is redirected in the default run script. This output also appears in the results/SPECjbb.xxx/SPECjbb.yyy.results files. The following sections appear as part of this output.

See Section 5 for a description of the runtime validity checks. Some of these cannot be checked until the end of the run. Such checks are reported at the end of the .results file.

The conformance check and its results do not go into the .results file. Next, the parameters scroll down the screen.

The results from each point in the benchmark print out the following:

Information about the minimum and maximum amount of work done by the threads/warehouses at that point.

Numerical results for each transaction type.

'Min', 'Max', and 'Avg' are the minimum, maximum, and average response times for each transaction type.

Count is the number of each type of transaction done during the run for this number of warehouses.

'Total' is the total time spent in this type of transaction, so Avg is Total / Count.

The 'result' (printed as the throughput) is the sum of the Counts divided by the number of seconds in the measurement interval.

Calculating results
Minimum transactions by a warehouse = 470224
Maximum transactions by a warehouse = 472731
Difference (thread spread) = 2507 (0.53%)
===============================================================================
TOTALS FOR: COMPANY with 2 warehouses
................. SPECjbb2005 0.06 Results (time in seconds) .................
                  Count    Total     Min     Max     Avg    Heap Space
New Order:       414459   223.27   0.000   2.084   0.001    total 254.1MB
Payment:         285744    75.24   0.000   2.082   0.000    used  219.7MB
OrderStatus:      28574     9.42   0.000   0.043   0.000
Delivery:         28575    61.09   0.001   0.068   0.002
Stock Level:      28574    11.51   0.000   0.042   0.000
Cust Report:     157029    81.42   0.000   0.132   0.001
throughput = 3928.64 SPECjbb2005 bops
++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

The equivalent of the text report is printed to the screen and .results file at the end of the run, summarizing it.
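The relationships between the columns in the sample output above can be checked with a short sketch. The class and method names are hypothetical, and the arithmetic follows only the descriptions given earlier (Avg is Total / Count; the result is the sum of the Counts divided by the number of seconds). The exact elapsed time of the sample run is not shown in the output, so the nominal 240 seconds is used here as an assumption.

```java
public class PointResultSketch {
    /** Sum of the per-transaction-type counts. */
    public static long totalCount(long[] counts) {
        long sum = 0;
        for (long c : counts) {
            sum += c;
        }
        return sum;
    }

    /** Throughput for one point: total transactions divided by elapsed seconds. */
    public static double throughput(long[] counts, double elapsedSeconds) {
        return totalCount(counts) / elapsedSeconds;
    }

    /** Average response time for one transaction type. */
    public static double average(double totalSeconds, long count) {
        return totalSeconds / count;
    }

    public static void main(String[] args) {
        // Count column from the sample output above.
        long[] counts = {414459, 285744, 28574, 28575, 28574, 157029};

        System.out.println(totalCount(counts));        // 942955
        System.out.println(throughput(counts, 240.0)); // close to the reported 3928.64
        System.out.println(average(223.27, 414459));   // New Order, about 0.0005 s
    }
}
```

Dividing by exactly 240 seconds gives a value slightly above the reported 3928.64, which is consistent with the measured interval having run a fraction of a second longer than the nominal length. The sum of the counts also equals the minimum plus the maximum transactions by a warehouse (470224 + 472731), as expected for a point with 2 warehouses.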

All the descriptive properties are echoed to the screen and results file. Also, system and JVM properties acquired by System.getProperties() are echoed.

Error messages are explained in greater detail below, listed by key words in the message.

Messages from the benchmark:

"VALIDATION ERROR" -- The JVM has produced a screen which looks different than expected for the transaction type specified in the message. Further messages show the difference between expected and produced lines in that screen. Determine why the JVM behaves differently than other JVMs. This message means the run is not publishable, even though the run proceeds.

"Out of memory" -- There was not enough memory for an allocation. Increasing your heap space is recommended to fix this. In cases with a very large number of warehouses, it may be that you need to increase your allowed number of threads in your JVM or operating system.

"Property file error" -- Some property in SPECjbb.props, SPECjbb_config.props, or the file which was specified as the properties file, was deleted, misspelled, or given a value outside of its expected range. In most cases there is a further message indicating which one. Compare against a known good copy of the properties file. "Unrecognized property" most often indicates a misspelling. Note that booleans should be specified in lower case.

"No valid warehouse sequence specified" -- Specify (and uncomment) either sequence_of_number_of_warehouses or all three of starting_warehouse_number, ending_warehouse_number, increment_warehouse_number in the properties file. Warehouse numbers (in sequence_of_number_of_warehouses) should be in increasing order.

"Error opening output file" -- Check that the directory of the specified output file exists and is writable, and that there is not already a file of the given name with write permission turned off.

"INVALID: Run will NOT be compliant" -- One of the "fixed" properties was assigned a value other than that required for valid runs. See Section 4.1.

"An I/O Exception occurred" -- Check whether there is enough disk space in the output directory disk or filesystem.

"JVM Check detected error" -- This means that the small subset of JVM conformance testing failed. Further messages indicate what the discrepancy is.

"Fails validation" -- Either jbb.jar was not first in the CLASSPATH, or the checksum of jbb.jar was not the expected value. This suggests that it has been recompiled or corrupted. If it is CLASSPATH, change CLASSPATH; otherwise, reinstall the kit.

Messages in the reports:

"not compliant" -- The "fixed" parameters were not the right values for a valid run. See Section 4.1.

"JVM failed operational validity check" -- The JVM has produced a screen which looks different than expected for the transaction type specified in the message. Messages in the benchmark output (or results file) show the difference between expected and produced lines in that screen. Determine why the JVM behaves differently than other JVMs. This message means the run is not publishable, even though the run proceeded.

"conformance checking returned negative" -- The small subset of JVM conformance testing found something non-conformant.

"recompiled or jbb.jar not first in CLASSPATH" -- Either jbb.jar was not first in the CLASSPATH, or the checksum of jbb.jar was not the expected value. This suggests that it has been recompiled or corrupted. If it is CLASSPATH, change CLASSPATH; otherwise, reinstall the kit.

"Not having all required points" -- Points below the peak were missing. They are required for a publishable run.

"0's will be averaged in for points not measured" -- Points between the peak and twice the number of warehouses at peak were missing. If it is possible to collect a run including these points, the score will be higher.

"At least points 1 to 8 are required" -- Specify a sequence of warehouses including all of the numbers from 1 to 8. This is to make publications on the SPEC site more informative.

"Measurement interval failing to end in close enough time limits" -- Further messages specify whether the measurement interval for a point ran too long or too short compared to the intended amount of time, and which point. Check that there is no other load on the system being measured, and that the JVM scheduler is not starving the thread which signals the end of the interval.

Missing file separator: On systems where the file separator is \, use \\ as the file separator in the properties files.
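The reason for doubling the backslash can be seen with java.util.Properties, which treats \ as an escape character when loading a file. The sketch below assumes the properties files are parsed with that class or with the same escaping rules; the class name and the property name output_dir are hypothetical and used only for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

public class SeparatorSketch {
    /** Loads the given properties-file text and returns the value stored under key. */
    public static String load(String fileText, String key) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(fileText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty(key);
    }

    public static void main(String[] args) {
        // File text as it would appear on disk: output_dir=C:\jbb\out
        String single = "output_dir=C:\\jbb\\out";
        // File text as it would appear on disk: output_dir=C:\\jbb\\out
        String doubled = "output_dir=C:\\\\jbb\\\\out";

        System.out.println(load(single, "output_dir"));  // C:jbbout (separators lost)
        System.out.println(load(doubled, "output_dir")); // C:\jbb\out
    }
}
```

With single backslashes the separators are silently dropped, which is one way a path can end up without its file separators.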

Still have a problem that makes no sense? Contact [email protected] .

To tune the benchmark, analyze the workload and look for possible bottlenecks in the configuration. There are a number of factors or bottlenecks that could affect the performance of the benchmark on the system.

The following tuning tips are for configuring the system to generate the best possible performance numbers for SPECjbb2005. Please note that these should not necessarily be used to configure and size real world systems.

The JVM is the critical component in determining the performance of the system for this workload. Use JVM profiling (java -prof or java -Xprof) to identify the API routines most heavily called. Other tools may be available on your system.

Unnecessary entries in CLASSPATH can degrade performance on some JVMs.

SPECjbb2005 uses a heap of at least 256MB and is known to benefit from additional memory. In many JVMs, heap size is set with the -ms/-mx (or -Xms/-Xmx) options to java.

The number of threads is approximately equal to the number of warehouses. For very large numbers of warehouses, you may need to increase operating system limits on threads.

At a larger number of warehouses (and hence threads), the synchronization of application methods, or the JVM's internal use of locking, may prevent the JVM from using all available CPU cycles. If there is a bottleneck without consuming 100% of CPU, lock profiling with JVM or operating system tools may be helpful.

SPECjbb2005 does not run over the network. There is very limited network activity, using socket communication to synchronize the multiple JVM instances, and it does not affect benchmark performance.

SPECjbb2005 does not write to disk as part of the measured workload. Classes are read from disk, of course, but that should be a negligible part of the workload.

Upon a successful run, the results may be submitted to the SPEC Java sub-committee for review by mailing the SPECjbb.<num>.raw file to [email protected]. When mailing the output properties file, include it in the body of the mail message, don't send it as an attachment, and mail only one result per email message.

Note: The SPECjbb.<num>.raw files use the configuration and parameter information in the properties file. Please edit the properties files with the correct information prior to running the benchmark for submission.

Every submission goes through a two-week review process. During the review, members of the sub-committee may ask for further information/clarifications on the submission. Once the result has been reviewed and approved by the sub-committee, it is displayed on the SPEC web site at www.spec.org.

Last updated: Thu April 27 12:02:02 PDT 2006

Copyright 1995 - 2006 Standard Performance Evaluation Corporation

URL: http://www.spec.org/jbb2005/docs/UserGuide.html