SPECstorage™ Solution 2020_genomics Result

Copyright © 2016-2022 Standard Performance Evaluation Corporation


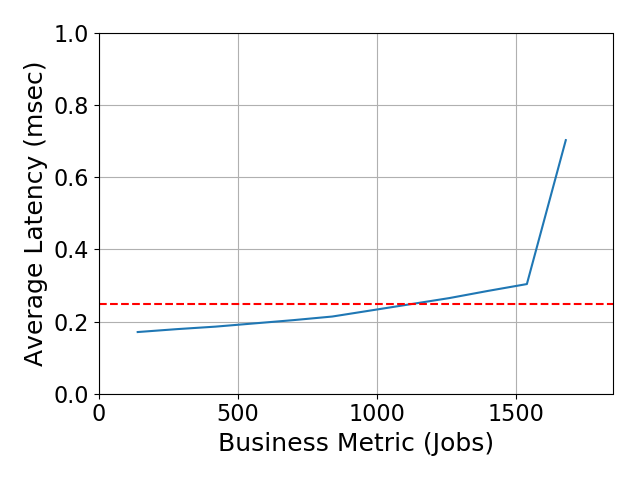

| UBIX TECHNOLOGY CO., LTD. | SPECstorage Solution 2020_genomics = 1680 Jobs |
|---|---|
| UbiPower 18000 distributed all-flash storage system | Overall Response Time = 0.25 msec |

| UbiPower 18000 distributed all-flash storage system | |
|---|---|
| Tested by | UBIX TECHNOLOGY CO., LTD. |
| Hardware Available | July 2022 |
| Software Available | July 2022 |
| Date Tested | September 2022 |
| License Number | 6513 |
| Licensee Locations | Shenzhen, China |

UbiPower 18000 is a new-generation, ultra-high-performance distributed all-flash storage system dedicated to providing high-performance data services for HPC/HPDA workloads, including AI and machine learning, genomics sequencing, EDA, CAD/CAE, real-time analytics, and media rendering. UbiPower 18000 combines high-performance hyperscale NVMe SSDs and storage class memory (SCM) with storage services, all connected over RDMA networks, to create a low-latency, high-throughput, scale-out architecture.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 14 | Storage Node | UBIX | UbiPower 18000 High-Performance X Node | UbiPower 18000 High-Performance X Node, with 16 slots for U.2 drives. |
| 2 | 28 | Client Server | Intel | M50CYP2UR208 | The M50CYP2UR208 is a 2U, 2-socket rack server with two 32-core Intel Xeon Gold 6338 CPUs @ 2.00 GHz and 512 GiB of system memory. Each server has one Mellanox ConnectX-5 100GbE dual-port network card. One server also acts as the Prime Client; all 28 servers, including the Prime Client, generate the workload. |
| 3 | 56 | 100GbE Card | Mellanox | MCX516A-CDAT | ConnectX-5 Ex EN network interface card, 100GbE dual-port QSFP28, PCIe Gen 4.0 x16. |
| 4 | 224 | SSD | Samsung | PM9A3 | 1.92TB NVMe SSD |
| 5 | 2 | Switch | Huawei | 8850-64CQ-EI | CloudEngine 8850 delivers high performance, high port density, and low latency for cloud-oriented data center networks and high-end campus networks. It supports 64 x 100GE QSFP28 ports. |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Clients | Client OS | CentOS 7.9 | Operating system (OS) for the M50CYP2UR208 client servers. |
| 2 | Storage Node | Storage OS | UbiPower OS 1.1.0 | Storage operating system. |

Storage Server

| Parameter Name | Value | Description |
|---|---|---|
| Port Speed | 100Gb | Each storage node has 4x 100GbE Ethernet ports connected to the switches. |
| MTU | 4200 | Jumbo frames configured for the 100Gb ports. |

Clients

| Parameter Name | Value | Description |
|---|---|---|
| Port Speed | 100Gb | Each client server has 2x 100GbE Ethernet ports connected to the switches. |
| MTU | 4200 | Jumbo frames configured for the 100Gb ports. |


Clients

| Parameter Name | Value | Description |
|---|---|---|
| bond | bond2 | Bond2 bonds the two 100GbE interfaces of each storage node network card and uses the balance-xor algorithm. UbiPower configures the bonding on the storage nodes automatically. |
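The report describes Bond2 only as balance-xor. As a rough illustration of how such a bond chooses an outgoing member, the Python sketch below models Linux bonding mode 2 with the kernel's default layer2 transmit hash policy. The hash policy actually configured on these systems is not stated in the report, so layer2 is an assumption, and select_slave is a hypothetical helper name.

```python
# Simplified model of Linux bonding mode 2 (balance-xor) using the kernel's
# default "layer2" transmit hash policy, following the formula in the kernel
# bonding documentation:
#   hash  = src MAC[5] XOR dst MAC[5] XOR packet type ID (EtherType)
#   slave = hash modulo number of bond members
# ASSUMPTION: the report does not say which xmit_hash_policy is in use.

ETH_P_IP = 0x0800  # EtherType of IPv4, used here as the packet type ID


def parse_mac(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    parts = mac.split(":")
    if len(parts) != 6:
        raise ValueError(f"not a MAC address: {mac!r}")
    return bytes(int(p, 16) for p in parts)


def select_slave(src_mac: str, dst_mac: str, slave_count: int,
                 packet_type: int = ETH_P_IP) -> int:
    """Index of the bond member that would transmit this frame (hypothetical helper)."""
    if slave_count <= 0:
        raise ValueError("slave_count must be positive")
    h = parse_mac(src_mac)[5] ^ parse_mac(dst_mac)[5] ^ packet_type
    return h % slave_count


# Every frame between the same two MAC addresses hashes to the same member,
# so a single peer-to-peer conversation uses at most one 100GbE link.
print(select_slave("00:11:22:33:44:01", "00:11:22:33:44:02", 2))  # prints 1
```

Under this policy, balance comes from having many distinct peers: each client/storage-node MAC pair is pinned to one member, and different pairs spread across both members.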

The single filesystem was attached via a single mount per client. The mount string used was "mount -t ubipfs /pool/spec-fs /mnt/spec-test"
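Before a run, one way to confirm on a client that this single mount is in place is to inspect /proc/mounts. The Python sketch below is a hypothetical helper (find_mount and check_spec_mount are illustrative names); it assumes the kernel reports the filesystem type as ubipfs, matching the -t argument of the mount string, which the report does not confirm.

```python
# Hypothetical helpers: check that the benchmark mount point is backed by the
# expected filesystem type, given /proc/mounts-style text.
# Each line has the form: device mountpoint fstype options dump pass
# ASSUMPTION: the fstype appears as "ubipfs", as passed to mount -t.


def find_mount(mounts_text: str, mountpoint: str):
    """Return (device, fstype) for mountpoint, or None if it is not mounted."""
    result = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        device, mnt, fstype = fields[0], fields[1], fields[2]
        if mnt == mountpoint:
            result = (device, fstype)  # a later entry is the one on top
    return result


def check_spec_mount(mounts_text: str,
                     mountpoint: str = "/mnt/spec-test",
                     expected_fstype: str = "ubipfs") -> bool:
    """True if mountpoint is mounted with the expected filesystem type."""
    found = find_mount(mounts_text, mountpoint)
    return found is not None and found[1] == expected_fstype
```

On a Linux client this would be called as check_spec_mount(Path("/proc/mounts").read_text()) after from pathlib import Path. /proc/mounts escapes spaces in paths as \040, which this simple parser does not decode; /mnt/spec-test needs no escaping.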


| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | Samsung PM9A3 1.92TB SSDs used for the UbiPower 18000 storage system | 8+2 | Yes | 224 |
| 2 | Micron 480GB SSDs used in the storage nodes and clients to boot and store the OS | RAID-1 | Yes | 84 |

| Number of Filesystems | Total Capacity | Filesystem Type |
|---|---|---|
| 1 | 308 TiB | ubipfs |

Each storage node has 16 Samsung PM9A3 SSDs attached to it, dedicated to the UbiPower filesystem. The single filesystem consumed all of the SSDs across all of the nodes.
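As a rough consistency check on the 308 TiB total capacity, the Python arithmetic below multiplies out the raw flash and applies the 8+2 data fraction. It assumes the 1.92TB drive rating is decimal (10^12 bytes), as SSD capacities are normally quoted, and it ignores any other filesystem overhead.

```python
# Back-of-the-envelope capacity check for this configuration.
# ASSUMPTIONS: 1.92TB means 1.92e12 bytes; 8+2 EC stores 8 data chunks
# for every 10 chunks written; no other overhead is modeled.

TIB = 2**40
DRIVES = 14 * 16          # 14 storage nodes x 16 PM9A3 SSDs = 224
DRIVE_BYTES = 1.92e12


def raw_tib(drives: int, drive_bytes: float) -> float:
    """Raw flash capacity in TiB."""
    return drives * drive_bytes / TIB


def ec_usable_tib(raw: float, data: int, parity: int) -> float:
    """Upper bound on usable space after erasure-coding overhead."""
    return raw * data / (data + parity)


raw = raw_tib(DRIVES, DRIVE_BYTES)     # about 391.2 TiB raw
usable = ec_usable_tib(raw, 8, 2)      # about 312.9 TiB before other overhead
print(f"raw={raw:.1f} TiB, 8+2 usable <= {usable:.1f} TiB, reported 308 TiB")
```

The result, roughly 313 TiB, is only an upper bound; the reported 308 TiB is about 1.6% lower, and the report does not break down that difference.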

The UbiPower filesystem was created with an 8+2 erasure-coding (EC) configuration, and all data and metadata are distributed evenly across the 14 storage nodes in the cluster.
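The report states only that data and metadata are spread evenly over the 14 nodes with 8+2 EC; it does not describe the placement algorithm. The Python sketch below shows one hypothetical way to achieve such a layout: each 10-chunk stripe is placed on 10 distinct nodes, with the starting node rotated per stripe. The functions place_stripe and stripe_survives are illustrative names, not UbiPower internals.

```python
# Hypothetical stripe placement for 8+2 EC on a 14-node cluster.
# Not UbiPower's algorithm: it only illustrates "evenly distributed" plus
# tolerance of two node failures per stripe.
from __future__ import annotations

from collections import Counter
from itertools import combinations

NODES = 14
DATA, PARITY = 8, 2
WIDTH = DATA + PARITY


def place_stripe(stripe_id: int, nodes: int = NODES, width: int = WIDTH) -> list[int]:
    """Node index for each chunk of a stripe, rotating the start node."""
    if width > nodes:
        raise ValueError("stripe is wider than the cluster")
    start = stripe_id % nodes
    return [(start + j) % nodes for j in range(width)]


def chunks_per_node(num_stripes: int) -> Counter:
    """How many chunks each node holds after placing num_stripes stripes."""
    counts = Counter()
    for s in range(num_stripes):
        counts.update(place_stripe(s))
    return counts


def stripe_survives(stripe_id: int, failed: set[int]) -> bool:
    """True if at least DATA chunks of the stripe sit on healthy nodes."""
    healthy = [n for n in place_stripe(stripe_id) if n not in failed]
    return len(healthy) >= DATA


# Any two simultaneous node failures leave every stripe with >= 8 chunks.
assert all(stripe_survives(s, set(pair))
           for s in range(NODES) for pair in combinations(range(NODES), 2))
```

Because every stripe uses 10 distinct nodes, losing any two nodes costs each stripe at most two chunks, which 8+2 coding can reconstruct; over 14 consecutive stripes, each node receives exactly 10 chunks.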

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 100GbE Network | 56 | Each client is connected to a single port on each switch. |
| 2 | 100GbE Network | 56 | Each storage node is connected to two ports on each switch. |

On each client server, the two 100GbE interfaces are bonded into one logical interface with an MTU of 4200. On each storage node, the two 100GbE interfaces of each network card are bonded into one logical interface with an MTU of 4200. Priority Flow Control (PFC) and Explicit Congestion Notification (ECN) are configured on the switches, client servers, and storage nodes.

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | CloudEngine 8850-64CQ-EI | 100GbE | 128 | 128 | The two CloudEngine switches are interconnected by an 800Gb LAG that uses 8 ports on each switch. Across the two switches, 56 ports are used for client connections and 56 for storage node connections (28 of each per switch). |
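The port counts in the transport and switch tables can be cross-checked with simple arithmetic. The Python sketch below uses only counts stated in this report and a nominal 100 Gb/s line rate per port; it shows that each 64-port switch carries 28 client ports, 28 storage node ports, and 8 LAG ports.

```python
# Port and nominal line-rate accounting for the two-switch fabric, using
# counts stated in the report. Achievable throughput will be lower than
# line rate (protocol overhead, bonding hash imbalance, etc.).

CLIENTS, CLIENT_PORTS = 28, 2        # one port to each switch
NODES, NODE_PORTS = 14, 4            # two ports to each switch
SWITCHES, PORTS_PER_SWITCH = 2, 64
LAG_PORTS_PER_SWITCH = 8             # 8 x 100GbE = 800Gb inter-switch LAG
PORT_GBPS = 100

client_ports = CLIENTS * CLIENT_PORTS          # 56 across both switches
node_ports = NODES * NODE_PORTS                # 56 across both switches
per_switch = (client_ports + node_ports) // SWITCHES + LAG_PORTS_PER_SWITCH
total_used = per_switch * SWITCHES

assert per_switch <= PORTS_PER_SWITCH, "fabric does not fit on the switches"
print(f"per switch: {client_ports // SWITCHES} client + "
      f"{node_ports // SWITCHES} storage + {LAG_PORTS_PER_SWITCH} LAG = {per_switch}")
print(f"used {total_used} of {SWITCHES * PORTS_PER_SWITCH} ports")
print(f"client line rate: {client_ports * PORT_GBPS / 1000} Tb/s, "
      f"storage line rate: {node_ports * PORT_GBPS / 1000} Tb/s")
```

Both sides of the fabric have 5.6 Tb/s of nominal line rate, and the 800Gb LAG only carries traffic that has to cross between the two switches.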

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 56 | CPU | Client Server | Intel Xeon Gold 6338 CPU @ 2.00GHz | UbiPower storage client, Linux OS, load generator, and device driver |
| 2 | 28 | CPU | Storage Node | Intel Xeon Gold 6338 CPU @ 2.00GHz | UbiPower Storage OS |


| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| 28x client servers with 512 GiB | 512 | 28 | V | 14336 |
| 14x storage nodes with 512 GiB | 512 | 14 | V | 7168 |
| 14x storage nodes with 2048 GiB of storage class memory | 2048 | 14 | NV | 28672 |
| Grand Total Memory Gibibytes | | | | 50176 |
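The memory table totals can be verified directly. The short Python check below multiplies size by instance count for each row and also sums the nonvolatile (NV) storage class memory separately.

```python
# Recompute the memory table totals (GiB). "NV" rows are the nonvolatile
# storage class memory in the storage nodes; "V" rows are volatile DRAM.
rows = [
    # (description, GiB per instance, instances, nonvolatile)
    ("client server DRAM", 512, 28, False),
    ("storage node DRAM", 512, 14, False),
    ("storage node SCM", 2048, 14, True),
]

total = sum(size * qty for _, size, qty, _ in rows)
nonvolatile = sum(size * qty for _, size, qty, nv in rows if nv)
print(total, nonvolatile)  # 50176 28672
```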

Each storage node has main memory that is used for the operating system and for caching filesystem read data. Each storage node also has storage class memory; see the description of stable storage that follows.

In UbiPower 18000, all writes are committed directly to the nonvolatile storage class memory before being written to the NVMe SSDs. All data is protected by UbiPower OS distributed erasure coding (8+2 in this test) across the storage nodes in the cluster. If the storage class memory fails, writes are no longer staged in it; data is instead written directly to the NVMe SSDs in write-through mode.
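This write path can be modeled as a two-tier store: writes are acknowledged once they are in nonvolatile SCM and destaged to SSD later, and after an SCM failure they go straight to SSD. The Python sketch below is a toy model under those assumptions; WritePathModel and its methods are hypothetical names, and it does not model erasure coding or UbiPower's actual destage policy.

```python
# Toy model of an SCM-staged write path with write-through fallback.
# Hypothetical names; not UbiPower OS interfaces.
from __future__ import annotations

from typing import Optional


class WritePathModel:
    def __init__(self) -> None:
        self.scm_healthy = True
        self.scm_log: dict[str, bytes] = {}  # nonvolatile staging area (SCM)
        self.ssd: dict[str, bytes] = {}      # backing NVMe SSD tier

    def write(self, key: str, data: bytes) -> str:
        """Commit data and return the tier it was committed to before the ack."""
        if self.scm_healthy:
            self.scm_log[key] = data   # ack once SCM holds the data
            return "scm"
        self.ssd[key] = data           # SCM failed: write-through to SSD
        return "ssd"

    def destage(self) -> int:
        """Copy staged writes from SCM to SSD; return how many were moved."""
        moved = len(self.scm_log)
        self.ssd.update(self.scm_log)
        self.scm_log.clear()
        return moved

    def fail_scm(self) -> None:
        """Mark SCM as failed. Recovering data that was staged on it
        (for example from EC-protected copies) is out of scope here."""
        self.scm_healthy = False

    def read(self, key: str) -> Optional[bytes]:
        # The newest copy of a key may still be staged in SCM.
        if key in self.scm_log:
            return self.scm_log[key]
        return self.ssd.get(key)
```

For example, after m = WritePathModel(), m.write("a", b"1") returns "scm"; after m.destage() and m.fail_scm(), a further m.write("b", b"2") returns "ssd".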


The 28 client servers are the load generators for the benchmark. Each load generator has access to the single namespace of the UbiPower filesystem. The benchmark tool accesses a single mount point on each load generator, and each mount point in turn corresponds to a single shared base directory in the filesystem. The clients process the file operations and issue the data requests to and from the 14 UbiPower storage nodes.


Generated on Wed Sep 28 16:43:47 2022 by SpecReport
