SPECstorage™ Solution 2020_vda Result

Copyright © 2016-2022 Standard Performance Evaluation Corporation


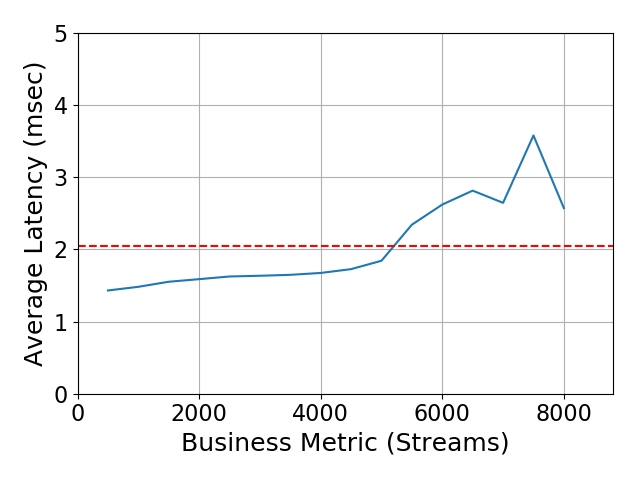

| Samsung Datacenter Technology and Cloud Solutions Lab | SPECstorage Solution 2020_vda = 8000 Streams |
|---|---|
| Samsung PM9A3 NVMe and WekaFS | Overall Response Time = 2.05 msec |


| Samsung PM9A3 NVMe and WekaFS | |
|---|---|
| Tested by | Samsung Datacenter Technology and Cloud Solutions Lab |
| Hardware Available | December 2021 |
| Software Available | December 2021 |
| Date Tested | December 2021 |
| License Number | 6309 |
| Licensee Locations | San Diego, California |

Our performance results show that the Weka high-performance filesystem, paired with Samsung's latest datacenter NVMe SSD, the PM9A3, delivers performance well suited to a wide variety of demanding datacenter workloads. With the Samsung PM9A3, users benefit from a combination of high performance and enterprise functionality built specifically for datacenters.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 6 | Storage Server | Dell | R7515 | The Dell EMC PowerEdge R7515 Rack Server is a highly scalable single-socket 2U rack server. The CPU is a single AMD EPYC 7702P 64-Core Processor @ 3.9 GHz, with 512 GiB of system memory. Each storage server has 2x Mellanox ConnectX-6 200GbE dual-port network cards as well as an embedded Broadcom 1GbE Ethernet card. A Micron Technology 240GB SATA SSD is used for the Linux OS and boot. Fifteen Samsung PM9A3 3.84TB NVMe U.2 drives are used for WekaFS. |
| 2 | 8 | Client Server | Dell | R7525 | The Dell EMC PowerEdge R7525 is a two-socket 2U rack server designed to run workloads using flexible I/O and network configurations. The CPUs are two AMD EPYC 7702P 64-Core Processors @ 3.9 GHz, with 1 TiB of system memory. Each client server has 1x Mellanox ConnectX-6 200GbE dual-port network card as well as an embedded Broadcom 1GbE Ethernet card. A Micron Technology 240GB SATA SSD is used for the Linux OS and boot. |
| 3 | 20 | 200GbE HBA Card | Mellanox | ConnectX-6 VPI 200GbE Dual-Port | ConnectX-6 Virtual Protocol Interconnect® (VPI) cards are a groundbreaking addition to the Mellanox ConnectX series of industry-leading adapter cards. Providing two ports of 200Gb/s for InfiniBand and Ethernet connectivity, sub-600ns latency and 215 million messages per second, ConnectX-6 VPI cards enable the highest performance and most flexible solution aimed at meeting the continually growing demands of data center applications. |
| 4 | 90 | SSD | Samsung | PM9A3 | PM9A3 offers tremendous performance for Read-Intensive data centers by applying PCIe® Gen 4, achieving 1000K IOPS in Random Read and 6800 MB/s in sequential read speed. Using impressively low power in small form factors (E1.S, U.2, M.2), PM9A3 delivers an efficient SSD solution for mixed data workloads. Samsung PM9A3 3.84TB NVMe U.2 drives were used in this test. |
| 5 | 2 | Switch | Mellanox | SN3700 | Mellanox SN3700 spine/super-spine offers 32 ports of 200GbE in a compact 1U form factor. It enables connectivity to endpoints at different speeds and carries a throughput of 12.8Tb/s, with a landmark 8.33Bpps processing capacity. As an ideal spine solution, the SN3700 allows maximum flexibility, with port speeds spanning from 10GbE to 200GbE per port. |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | WekaFS | File System | 3.12.2 | WekaFS is a fully-distributed, parallel file system that was written entirely from scratch to deliver the highest-performance file services by leveraging NVMe flash. The software also includes integrated tiering that seamlessly expands the namespace to and from hard disk drive (HDD) object storage, without the need for special data migration software or complex scripts; all data resides in a single namespace for easy access and management. |
| 2 | Storage Node | Storage OS | Ubuntu 20.04 | Operating system (OS) for the 6 Dell R7515 storage servers. |
| 3 | Clients | Storage OS | Ubuntu 20.04 | Operating system (OS) for the 8 Dell R7525 clients. |

Storage Server

| Parameter Name | Value | Description |
|---|---|---|
| Port speed | 200Gb | Each storage server has 4x 200GbE ethernet ports connected to the switch. |
| MTU | 9000 | Jumbo frames of MTU 9000 bytes have been configured on the 200GbE connections. |

Clients

| Parameter Name | Value | Description |
|---|---|---|
| Port speed | 200Gb | Each client has 2x 200GbE ethernet ports connected to the switch. |
| MTU | 9000 | Jumbo frames of MTU 9000 bytes have been configured on the 200GbE connections. |

None

Clients

| Parameter Name | Value | Description |
|---|---|---|
| num_cores | 8 | WekaFS mount option to designate the number of frontend cores to allocate for the client. |
| memory_mb | 30720 | WekaFS mount option to designate amount of memory to be used by the client (for huge pages). |
| net | ens3f0,ens3f1 | WekaFS mount option to designate both client 200GbE network ports be used for the mount. |

The single filesystem was attached via a single mount per client. The mount string used was `sudo mount -t wekafs -o net=ens3f0,net=ens3f1 -o num_cores=8,memory_mb=30720 11.11.208.72/weka_perf /mnt/wekamount/`
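As a minimal sketch, the reported mount string can be assembled from the three tuning parameters listed in the table. The helper name `build_weka_mount` is hypothetical and is not part of WekaFS; the option names, backend address, filesystem name, and mount point are copied from the mount string above.

```python
import shlex


def build_weka_mount(backend, filesystem, mount_point, nets, num_cores, memory_mb):
    """Return the argv list for a WekaFS client mount (hypothetical helper)."""
    # One net=<interface> entry per 200GbE port, as in the reported mount string.
    net_opts = ",".join(f"net={name}" for name in nets)
    # Frontend core count and huge-page memory for the client.
    tuning_opts = f"num_cores={num_cores},memory_mb={memory_mb}"
    return [
        "sudo", "mount", "-t", "wekafs",
        "-o", net_opts,
        "-o", tuning_opts,
        f"{backend}/{filesystem}",
        mount_point,
    ]


cmd = build_weka_mount(
    backend="11.11.208.72",
    filesystem="weka_perf",
    mount_point="/mnt/wekamount/",
    nets=["ens3f0", "ens3f1"],
    num_cores=8,
    memory_mb=30720,
)
mount_string = shlex.join(cmd)
print(mount_string)  # reproduces the mount string reported above
```

Building the command as a list keeps each argument separate, which makes it straightforward to apply the same mount on all 8 clients with only the parameters varying.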

None

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | Total of 90x Samsung PM9A3 3.84TB SSDs used for Weka File-System. | | Yes | 90 |
| 2 | Total of 6x Micron Technology 240GB SATA SSD used for boot. | | Yes | 6 |

| Number of Filesystems | 1 |
|---|---|
| Total Capacity | 188 TiB |
| Filesystem Type | WekaFS |

Each storage server has 15x Samsung PM9A3 attached to it, which are dedicated to the Weka filesystem. Each storage server has 1x Micron Technology 240GB SATA SSD used as the boot drive. The storage cluster consisted of 6 storage servers with a single WekaFS filesystem created in the cluster.
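The relationship between the raw drive capacity and the reported 188 TiB filesystem can be sketched with a few lines of arithmetic. This is an illustrative calculation only: it assumes the 3.84TB drive size is decimal terabytes and that the 4+2 data protection scheme mentioned in this report leaves 4/6 of raw capacity for data. The report does not state how the remaining difference arises, so it is shown here simply as an unexplained gap.

```python
# Drive inventory from the report: 6 storage servers x 15 PM9A3 drives each.
STORAGE_SERVERS = 6
DRIVES_PER_SERVER = 15
DRIVE_TB = 3.84            # assumed to be decimal terabytes (10**12 bytes)
REPORTED_TIB = 188         # "Total Capacity" from the report

drives = STORAGE_SERVERS * DRIVES_PER_SERVER
raw_bytes = drives * DRIVE_TB * 10**12
raw_tib = raw_bytes / 2**40

# Assumption: 4+2 protection stores 4 data units for every 6 units written.
data_fraction = 4 / (4 + 2)
after_protection_tib = raw_tib * data_fraction

# Whatever remains is not explained by the report (spares, metadata, or
# provisioning choices are possibilities, but none is confirmed).
gap_tib = after_protection_tib - REPORTED_TIB

print(f"drives: {drives}")
print(f"raw capacity: {raw_tib:.1f} TiB")
print(f"after 4+2 protection: {after_protection_tib:.1f} TiB")
print(f"gap to reported capacity: {gap_tib:.1f} TiB")
```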

WekaFS is a fully containerized storage OS. In this configuration, 3 LXC containers running WekaFS storage processing were deployed per storage server.

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 200GbE Network | 16 | Each client server is connected to the switch via a dual-port 200GbE HBA. |
| 2 | 200GbE Network | 24 | Each storage server is connected to the switch via 2x dual-port 200GbE HBA. |

None

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | Mellanox SN3700 | 200GbE | 64 | 40 | 2x Mellanox SN3700 switches connected together with a 600Gb LAG. Each switch has 8 ports used for client connections and 12 ports used for target server connections. |

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 16 | CPU | Client Server | AMD EPYC 7702P 64-Core Processor | Each client Dell R7525 has a dual socket AMD EPYC 7702P 64-Core 3.9GHz processor. |
| 2 | 6 | CPU | Storage Server | AMD EPYC 7702P 64-Core Processor | Each Dell R7515 storage server has a single socket AMD EPYC 7702P 64-Core 3.9GHz processor. |

None

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| 8x client servers Dell R7525 with 1024GB of memory. | 1024 | 8 | V | 8192 |
| 6x storage servers Dell R7515 with 512GB of memory. | 512 | 6 | V | 3072 |
| Grand Total Memory Gibibytes | | | | 11264 |

None

WekaFS does not use any internal memory to temporarily cache write data to the underlying storage system. All writes are committed directly to the storage disk and protected via WekaFS Distributed Data Protection (4+2 in this case). There is no need for any RAM battery protection. In the event of a power failure, a write in transit would not be acknowledged.

The system under test consisted of the 8 client servers and 6 storage servers. The 6 storage servers each have 2x dual-port network interfaces connected to a 200GbE switch. The 8 load generating client servers each have a dual-port network interface also connected to a 200GbE switch.
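The port counts in the transport and switch tables follow directly from this topology. The short check below recomputes them and adds the nominal aggregate line rate on each side. The bandwidth figures are simple port-count multiples of 200 Gb/s, not measured throughput, and the even split of ports across the two switches is taken from the switch notes in this report.

```python
# Topology from the report.
CLIENTS = 8
PORTS_PER_CLIENT = 2       # one dual-port 200GbE card per client
STORAGE_SERVERS = 6
PORTS_PER_SERVER = 4       # two dual-port 200GbE cards per storage server
PORT_GBPS = 200
SWITCHES = 2
PORTS_PER_SWITCH = 32      # SN3700: 32 ports of 200GbE

client_ports = CLIENTS * PORTS_PER_CLIENT
server_ports = STORAGE_SERVERS * PORTS_PER_SERVER
used_ports = client_ports + server_ports
total_ports = SWITCHES * PORTS_PER_SWITCH

# Nominal line-rate totals (an upper bound, not a benchmark result).
client_gbps = client_ports * PORT_GBPS
server_gbps = server_ports * PORT_GBPS

print(f"client ports: {client_ports}, storage ports: {server_ports}")
print(f"used ports: {used_ports} of {total_ports}")
print(f"per switch: {client_ports // SWITCHES} client + {server_ports // SWITCHES} storage")
print(f"nominal client bandwidth: {client_gbps} Gb/s")
print(f"nominal storage bandwidth: {server_gbps} Gb/s")
```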

None

One of the 8 client servers acted as the benchmark's prime client to initiate the tests. The benchmark load is distributed across the 8 Dell R7525 client servers using a single mount point per client.

None

The solution under test was a standard WekaFS cluster in dedicated server mode. The solution handles large-file I/O as well as small-file random I/O and metadata-intensive applications. No specialized tuning is required for different or mixed-use workloads. None of the components used to perform the test were patched with Spectre or Meltdown patches (CVE-2017-5754, CVE-2017-5753, CVE-2017-5715).

Generated on Mon Jan 10 17:59:06 2022 by SpecReport
